
Building a Polymarket Bot, Part 3

Dec 23, 2025

📒 Docs · ☎️ Telegram · 𝕏 @ZEITFinance · 𝕏 @autonomous_af

← Previous: Part 2, Selection is Performance Engineering

ZEIT FINANCE · Building a Polymarket Bot

Part 3 — The Local Mirror

Introduction

In Part 2, we architected the Scanner to curate a high-quality tradable universe. Now, we move to the core of the execution engine.

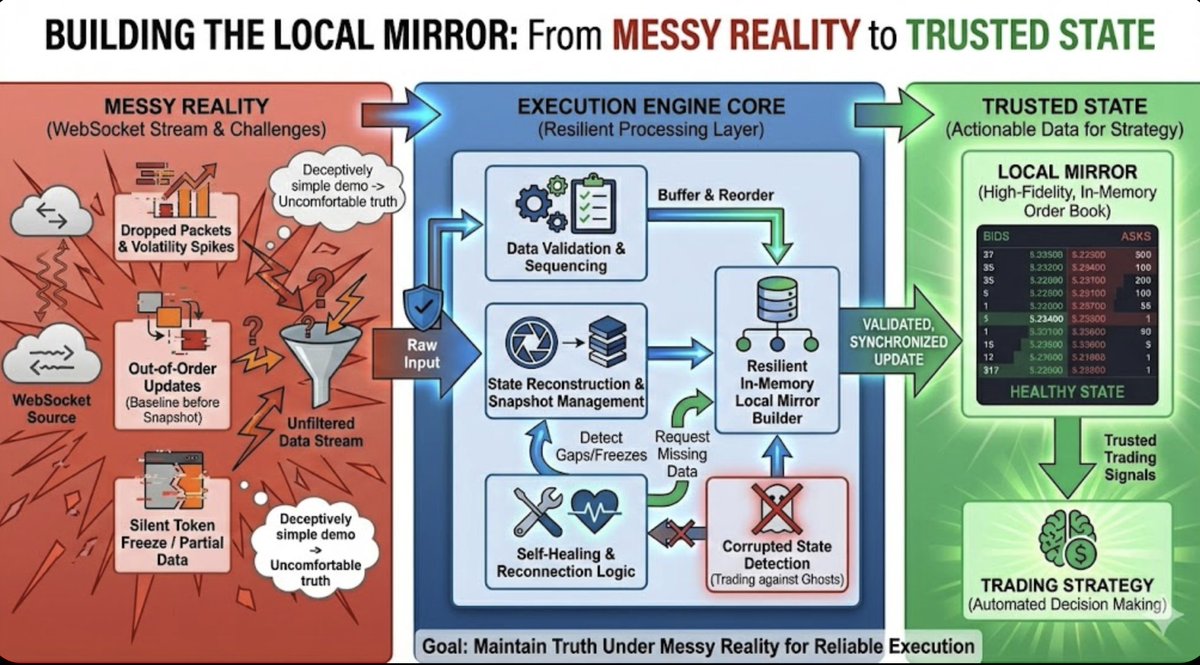

WebSockets often look deceptively simple in a demo: you connect, subscribe, and watch the JSON events roll in. But the first time you wire this data stream into an automated trading system, you encounter an uncomfortable truth:

Real-time trading isn't about "getting messages." It is about maintaining truth under messy reality.

Messy reality is a dropped packet during a volatility spike. It is an update arriving before its baseline snapshot. It is a single token silently freezing while the rest of the connection looks healthy.

If your bot trades on this corrupted state, you aren't just missing fills—you are manufacturing fake edges and trading against ghosts.

This article details the engineering required to build the Local Mirror: a resilient, self-healing, in-memory representation of the order book that your strategies can actually trust.

The Local Mirror transforms messy WebSocket reality into trusted, actionable state

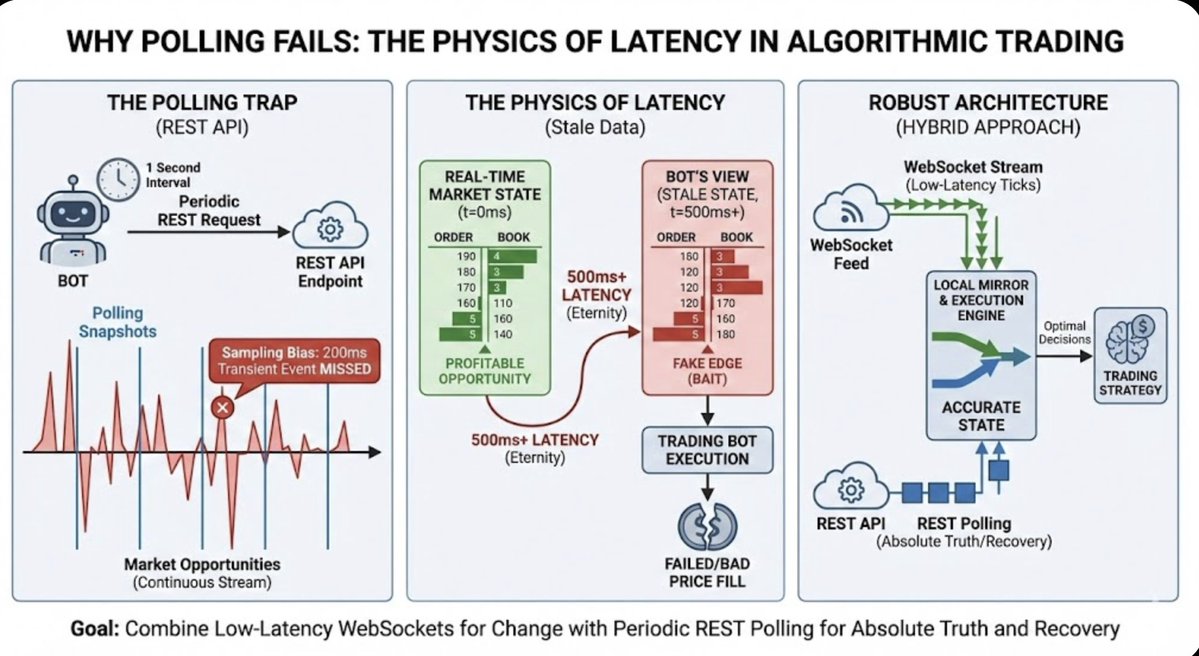

1. Why Polling Fails (The Physics of Latency)

A common instinct when building a bot is to rely on simple polling: just hit the REST API every second to get the latest prices. While this works for a user interface, it is fatal for an algorithmic trader.

The failure of polling is a matter of physics. Market opportunities often decay faster than any reasonable polling interval allows you to react.

If you poll every second, you are making decisions on state that is 500ms old on average and up to a full second old in the worst case, which is an eternity in high-frequency environments. In arbitrage setups, that stale data is often indistinguishable from a profitable opportunity, baiting your bot into orders that are likely to fail or fill at bad prices.
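Here is where the 500ms figure comes from. Assume a market change lands at a uniformly random moment inside a polling interval of length $T$. Its age when the next poll sees it is then uniform on $[0, T]$:

$$
\mathbb{E}[\text{age}] = \int_0^{T} t \cdot \frac{1}{T}\,dt = \frac{T}{2} = \frac{1000\ \text{ms}}{2} = 500\ \text{ms}
$$

The worst case approaches the full interval $T$, and the REST round trip adds to both numbers.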

The Sampling Bias Trap

Markets are continuous streams, not discrete snapshots. A massive liquidity injection or a spread collapse might exist for only 200ms. A polling architecture will miss these transient events entirely, while a WebSocket-based system captures every tick.
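The sampling gap is easy to estimate. The sketch below rests on two assumptions: a single transient event starts at a uniformly random moment relative to the poll clock, and each poll reads the book instantaneously. It brute-forces the catch rate by sweeping the event's start offset across one interval, then checks the result against the closed form min(d / T, 1). Both function names are illustrative.

```python
def catch_probability_closed_form(event_ms: float, poll_interval_ms: float) -> float:
    """Probability that at least one poll lands inside an event lasting event_ms."""
    return min(event_ms / poll_interval_ms, 1.0)


def catch_probability_sweep(event_ms: float, poll_interval_ms: float, steps: int = 10_000) -> float:
    """Brute-force check: polls fire at t = 0, T, 2T, ...; the event starts somewhere
    in (0, T) and is caught only if it is still alive when the poll at t = T fires."""
    caught = 0
    for i in range(steps):
        start = (i + 0.5) * poll_interval_ms / steps   # evenly spaced start offsets
        if start + event_ms >= poll_interval_ms:       # event still alive at the next poll
            caught += 1
    return caught / steps


if __name__ == "__main__":
    # A 200ms spread collapse against a 1-second polling loop
    print(catch_probability_closed_form(200, 1000))
    print(catch_probability_sweep(200, 1000))
```

Under these assumptions, a one-second poller sees only about 20% of 200ms events and misses the other 80%. A WebSocket subscription, by contrast, is pushed each change as it happens.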

💡 The Solution: The most robust architecture doesn't choose between them. It uses WebSockets for low-latency updates and REST polling for absolute truth and recovery.

The physics of latency: polling creates sampling bias and stale data that bait your bot into bad trades

2. The Mental Model: The Pipeline

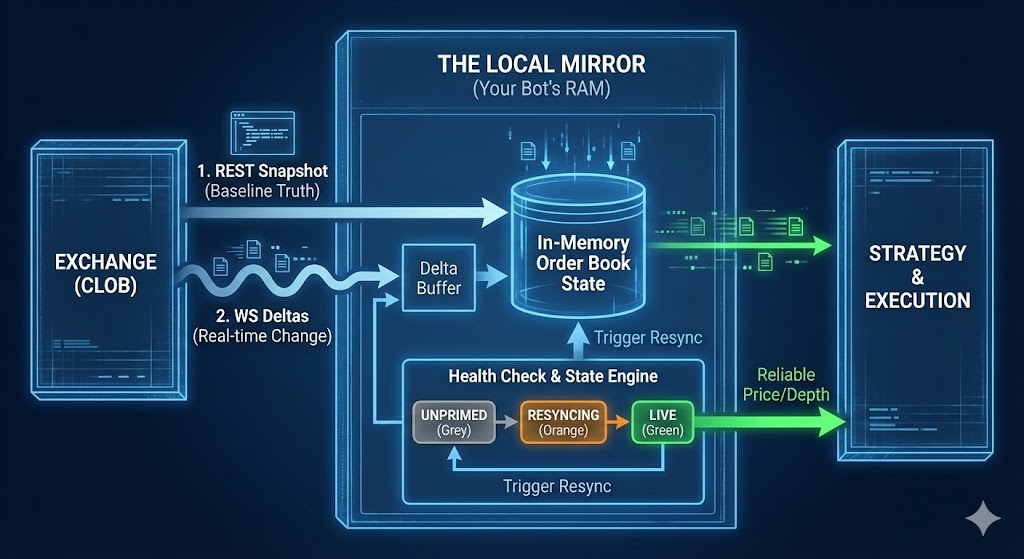

Forget "WebSocket Client" as the product. The product is a continuously trustworthy in-memory order book.

To be considered trustworthy, your internal book must be:

- Consistent: no crossed prices, negative sizes, or other invariant violations

- Fresh: reflecting the exchange's current state to the millisecond

- Self-healing: capable of recovering and resyncing automatically from any failure

This requires viewing your data ingestion not as a simple listener, but as a state management pipeline:

The pipeline starts by fetching a REST Snapshot to establish baseline truth. Once primed, it applies WS Deltas to keep that state current. But critically, it doesn't assume this state remains perfect. Continuous Health Checks monitor for divergence, and if any drift is detected, a Resync is triggered to wipe the slate clean and restore absolute truth.
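The loop below is a minimal sketch of that pipeline, not a production client. It assumes a simplified message shape: each delta is a `(side, price, size)` tuple, `"BUY"` marks the bid side, and a size of zero removes the level. The REST fetch, the delta source, and the health check are passed in as plain callables. `fetch_snapshot`, `is_healthy`, and `run_mirror` are hypothetical names, not part of any Polymarket SDK.

```python
from decimal import Decimal
from typing import Callable, Iterable

Level = dict[Decimal, Decimal]          # price -> size
Snapshot = tuple[Level, Level]          # (bids, asks)
Delta = tuple[str, Decimal, Decimal]    # (side, price, new size); size 0 deletes the level


def apply_delta(bids: Level, asks: Level, delta: Delta) -> None:
    side, price, size = delta
    levels = bids if side == "BUY" else asks
    if size == 0:
        levels.pop(price, None)   # level fully consumed or cancelled
    else:
        levels[price] = size      # assumed: deltas carry the new absolute size, not an increment


def run_mirror(
    fetch_snapshot: Callable[[], Snapshot],
    deltas: Iterable[Delta],
    is_healthy: Callable[[Level, Level], bool],
) -> tuple[Level, Level, int]:
    """Prime from REST, apply WS deltas, and resync whenever the health check fails.
    Returns the final bids, asks, and the number of resyncs performed."""
    bids, asks = (dict(side) for side in fetch_snapshot())           # 1. baseline truth (copied)
    resyncs = 0
    for delta in deltas:
        apply_delta(bids, asks, delta)                               # 2. keep state current
        if not is_healthy(bids, asks):                               # 3. continuous health check
            bids, asks = (dict(side) for side in fetch_snapshot())   # 4. wipe the slate, re-prime
            resyncs += 1
    return bids, asks, resyncs
```

Copying the snapshot dictionaries keeps a cached or reused REST response from being mutated by later deltas.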

The Local Mirror architecture: REST snapshots + WS deltas + Health Check State Engine = Reliable execution

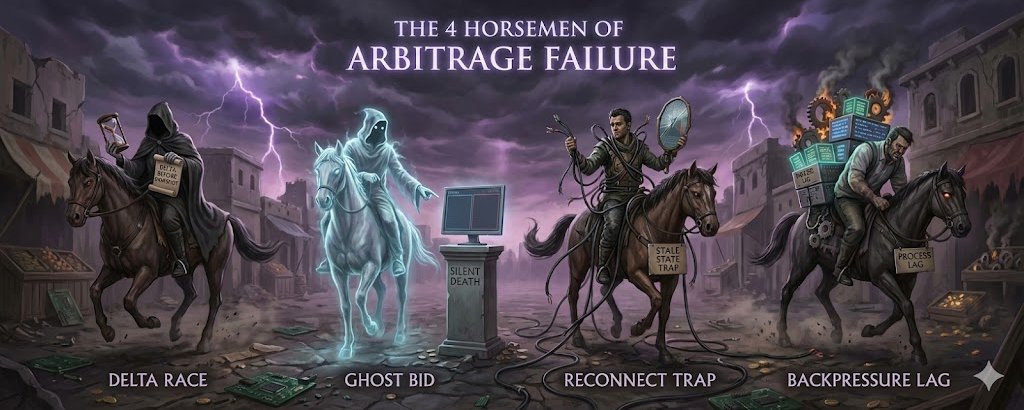

3. The Four Horsemen of Arbitrage Failure

⚠️ If you treat connection failures as edge cases, your bot will eventually lose money. In a production environment, the following failure modes are effectively guaranteed to happen.

🏇 Horseman #1: The "Delta Before Snapshot" Race Condition

When you first connect, you might receive `price_change` events before the initial full book snapshot arrives. Applying a "change" to an empty book effectively invents liquidity that doesn't exist.

Buffer these incoming deltas in memory, applying them only after the snapshot has been processed and the book is primed.
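Here is a minimal sketch of that buffering for one side of one book. It assumes every message, snapshot and delta alike, carries a monotonically increasing sequence number `seq`, so deltas the snapshot already includes can be skipped on replay. If your feed only exposes timestamps, the same comparison works only approximately. `PendingBook` and its fields are hypothetical names, not a Polymarket API.

```python
from dataclasses import dataclass, field


@dataclass
class PendingBook:
    """One side of one token's book; deltas are held back until a snapshot primes it."""
    primed: bool = False
    snapshot_seq: int = -1
    levels: dict[str, float] = field(default_factory=dict)              # price -> size
    buffer: list[tuple[int, str, float]] = field(default_factory=list)  # (seq, price, size)

    def on_delta(self, seq: int, price: str, size: float) -> None:
        if not self.primed:
            # Applying this to an empty book would invent liquidity: hold it instead.
            self.buffer.append((seq, price, size))
        elif seq > self.snapshot_seq:
            self._apply(price, size)
        # else: a late delta that the snapshot already reflects, so drop it

    def on_snapshot(self, seq: int, levels: dict[str, float]) -> None:
        self.levels = dict(levels)
        self.snapshot_seq = seq
        self.primed = True
        # Replay buffered deltas in sequence order, skipping any the snapshot already covers.
        for d_seq, price, size in sorted(self.buffer):
            if d_seq > seq:
                self._apply(price, size)
        self.buffer.clear()

    def _apply(self, price: str, size: float) -> None:
        if size == 0:
            self.levels.pop(price, None)   # level removed
        else:
            self.levels[price] = size      # assumed: deltas carry the new absolute size
```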

🏇 Horseman #2: The "Ghost Bid" (Silent Death)

A WebSocket connection can appear perfectly healthy—pings are flowing, and most markets are updating—while one specific token silently freezes.

If your system tracks health only at the connection level, it will miss this token-level failure. Your bot will continue trading on a "Best Bid" that disappeared five minutes ago.

Track freshness timestamps per token to detect this silent death.
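Here is a sketch of per-token freshness tracking. `FreshnessTracker` and its `max_age_s` threshold are hypothetical. The clock defaults to `time.monotonic`, which is unaffected by wall-clock adjustments, but it is injectable so the logic can be tested without sleeping.

```python
import time
from typing import Callable


class FreshnessTracker:
    """Records when each token's book last changed and reports tokens that went quiet."""

    def __init__(self, max_age_s: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.max_age_s = max_age_s
        self.clock = clock
        self.last_update: dict[str, float] = {}

    def touch(self, token_id: str) -> None:
        # Call on every message that modifies this token's book, not on connection pings.
        self.last_update[token_id] = self.clock()

    def staleness(self, token_id: str) -> float:
        # now - last_update_time; infinite if this token has never updated
        last = self.last_update.get(token_id)
        return float("inf") if last is None else self.clock() - last

    def stale_tokens(self, token_ids: list[str]) -> list[str]:
        return [t for t in token_ids if self.staleness(t) > self.max_age_s]
```

A token that is merely quiet trips the same alarm as a frozen one. A stale reading is therefore best treated as a reason to probe or resync that token, not as proof of failure.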

🏇 Horseman #3: The Reconnect Trap

Brief disconnects are dangerous not because the connection drops, but because of what happens when it returns.

If you reconnect and simply resume processing, you have missed every update that occurred during the outage. Your book is now permanently drifted.

Treat every reconnect as a total invalidation of state: clear the memory and re-prime from scratch.
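Here is a minimal sketch of "every reconnect is a total invalidation." It assumes your client exposes two callbacks: `subscribe`, which sends the subscription for a list of token IDs, and `fetch_snapshot`, a REST book fetch for one token. Both callbacks, like `MirrorRegistry` itself, are hypothetical names standing in for your own client code.

```python
from typing import Callable


class MirrorRegistry:
    """Holds one book per token and treats every (re)connect as a full invalidation."""

    def __init__(self, token_ids: list[str]) -> None:
        self.token_ids = list(token_ids)
        self.books: dict[str, dict[str, float]] = {}
        self.primed: dict[str, bool] = {t: False for t in self.token_ids}

    def on_connect(
        self,
        subscribe: Callable[[list[str]], None],
        fetch_snapshot: Callable[[str], dict[str, float]],
    ) -> None:
        # 1. Throw everything away: state from before the outage may have drifted.
        self.books.clear()
        self.primed = {t: False for t in self.token_ids}
        # 2. Re-subscribe first so new deltas start flowing (buffer them as in Horseman #1).
        subscribe(self.token_ids)
        # 3. Re-prime every token from a fresh REST snapshot.
        for t in self.token_ids:
            self.books[t] = dict(fetch_snapshot(t))
            self.primed[t] = True

    def is_tradable(self, token_id: str) -> bool:
        return self.primed.get(token_id, False)
```

Re-subscribing before re-priming matters. Deltas that arrive while the snapshots are in flight are not lost, provided they are buffered rather than applied.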

🏇 Horseman #4: Backpressure (Process Lag)

Sometimes the socket is fine, but your bot is the bottleneck. If your downstream logic (logging, database writes, complex math) is slower than the incoming data rate, a queue builds up.

You might be processing messages that are technically "valid" but are 500ms old.

Monitor `apply_lag` (the difference between message generation time and processing time) to catch this invisible latency.
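Here is a sketch of `apply_lag` monitoring over a sliding window. It assumes each message carries an exchange-side generation timestamp in milliseconds. It also assumes your host clock is kept close to the exchange's (for example via NTP), because any clock offset shows up directly as lag. The window size, the 250ms threshold, and the use of p99 are illustrative choices, not recommendations.

```python
import math
from collections import deque


class LagMonitor:
    """Rolling window of apply_lag = local processing time - message generation time (ms)."""

    def __init__(self, window: int = 1000, max_lag_ms: float = 250.0) -> None:
        self.samples: deque = deque(maxlen=window)   # oldest samples drop off automatically
        self.max_lag_ms = max_lag_ms

    def record(self, msg_ts_ms: float, processed_ts_ms: float) -> float:
        lag = processed_ts_ms - msg_ts_ms
        self.samples.append(lag)
        return lag

    def percentile(self, q: float) -> float:
        """Nearest-rank percentile (0 < q <= 100) over the current window."""
        if not self.samples:
            raise ValueError("no samples recorded yet")
        ordered = sorted(self.samples)
        rank = math.ceil(q * len(ordered) / 100)
        return ordered[max(rank, 1) - 1]

    def backpressured(self) -> bool:
        # Judge on the tail, not the mean: a handful of very late messages is what hurts.
        return bool(self.samples) and self.percentile(99) > self.max_lag_ms
```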

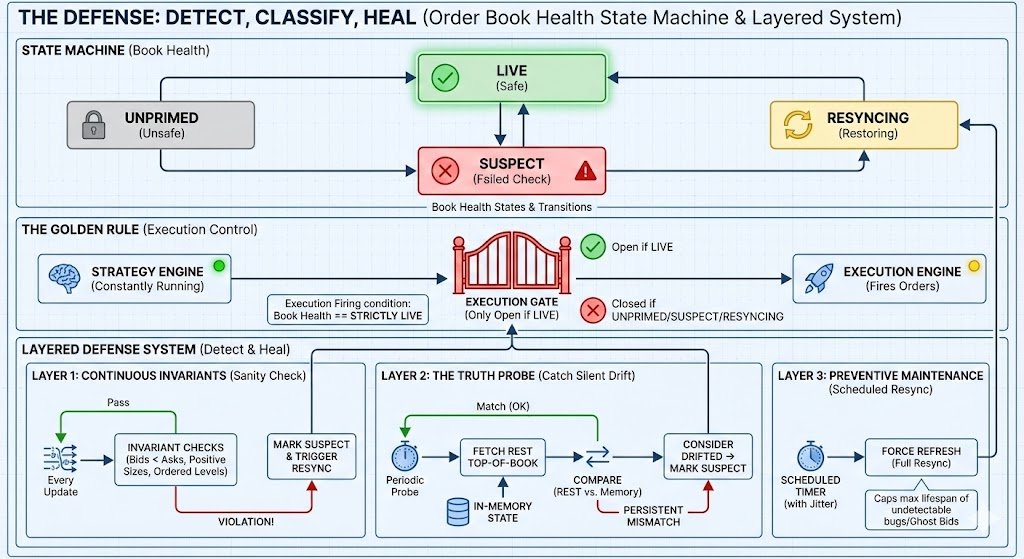

4. The Defense: Detect, Classify, Heal

To keep strategies from executing on garbage data, we treat the book's health as a formal state machine.

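The book moves through four states:

- Initial state: waiting for its first snapshot

- Safe to trade: the book is LIVE

- Halt execution: the book is SUSPECT

- Recovering: a resync is in progress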

Your strategy engine can run constantly, but your execution engine must only fire when the relevant books are strictly LIVE.
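The state machine can be sketched as an enum plus an explicit transition table. LIVE and SUSPECT are the names used in this article. SYNCING and RESYNCING are hypothetical names for the initial and recovering states, and the transition table is one reasonable choice rather than a prescribed one.

```python
from enum import Enum


class BookState(Enum):
    SYNCING = "syncing"      # initial state: waiting for the first snapshot
    LIVE = "live"            # safe to trade
    SUSPECT = "suspect"      # halt execution: invariant broken, drifted, or stale
    RESYNCING = "resyncing"  # recovering: a fresh snapshot is in flight


# Allowed transitions; anything else indicates a bug and raises.
TRANSITIONS = {
    BookState.SYNCING: {BookState.LIVE, BookState.SUSPECT},
    BookState.LIVE: {BookState.SUSPECT, BookState.RESYNCING},   # RESYNCING for scheduled refreshes
    BookState.SUSPECT: {BookState.RESYNCING},
    BookState.RESYNCING: {BookState.LIVE, BookState.SUSPECT},
}


class BookHealth:
    def __init__(self) -> None:
        self.state = BookState.SYNCING

    def transition(self, new_state: BookState) -> None:
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"illegal transition {self.state.name} -> {new_state.name}")
        self.state = new_state

    def can_execute(self) -> bool:
        # Strategies may evaluate in any state; orders only fire when the book is LIVE.
        return self.state is BookState.LIVE
```

The key design choice is that SUSPECT can only exit through RESYNCING. A book therefore never returns to LIVE without a fresh snapshot.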

We enforce this via a layered defense system:

The complete defense system: State Machine + Golden Rule + Layered Defense

Layer 1: Continuous Invariants

Every single update triggers a sanity check:

| | Invariant check |
|---|---|
| ✓ | Is the best bid below the best ask (no crossed book)? |
| ✓ | Are all sizes positive? |
| ✓ | Are the price levels on each side in strict price order? |

If any mathematical invariant is violated, we don't try to patch it. We immediately mark the book SUSPECT and trigger a full resync.
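Here is a sketch of the per-update sanity check on a normalized book. It assumes both sides have already been converted to best-first lists of `(price, size)` pairs using `Decimal`. Raw feeds may arrive in a different order, and floats make price comparisons fragile. `invariant_violations` is a hypothetical helper.

```python
from decimal import Decimal

Levels = list[tuple[Decimal, Decimal]]  # (price, size), best price first


def invariant_violations(bids: Levels, asks: Levels) -> list[str]:
    """Return human-readable violations; an empty list means the book passed.
    Expects bids sorted descending by price and asks sorted ascending."""
    problems: list[str] = []
    for side, levels in (("bid", bids), ("ask", asks)):
        for price, size in levels:
            if size <= 0:
                problems.append(f"non-positive {side} size {size} at {price}")
    if any(nxt[0] >= cur[0] for cur, nxt in zip(bids, bids[1:])):
        problems.append("bids not strictly descending")
    if any(nxt[0] <= cur[0] for cur, nxt in zip(asks, asks[1:])):
        problems.append("asks not strictly ascending")
    if bids and asks and bids[0][0] >= asks[0][0]:
        problems.append(f"crossed book: best bid {bids[0][0]} >= best ask {asks[0][0]}")
    return problems
```

Following the rule above, any non-empty result should mark the book SUSPECT and force a resync. The function deliberately reports problems instead of repairing them.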

Layer 2: The Truth Probe

Internal consistency doesn't prove external reality. To catch silent drift, the system should frequently perform a lightweight "Truth Probe":

- Fetch the top-of-book price from REST

- Compare it to the in-memory state

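- If they mismatch for more than a few consecutive checks → the book is considered drifted

A single mismatch is not enough, because the REST response and the WebSocket stream are never sampled at exactly the same instant. Persistent disagreement is what signals real drift.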
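Here is a sketch of the Truth Probe's decision rule. `fetch_rest_top` is a hypothetical callable that wraps whatever REST request you use to read a token's best bid and ask. The strike count of 3 stands in for "a few checks" and is an assumption to tune.

```python
from decimal import Decimal
from typing import Callable, Optional

TopOfBook = tuple[Optional[Decimal], Optional[Decimal]]  # (best bid, best ask)


class TruthProbe:
    """Compares REST top-of-book with the local mirror and flags drift only after
    max_strikes consecutive disagreements for the same token."""

    def __init__(self, fetch_rest_top: Callable[[str], TopOfBook], max_strikes: int = 3) -> None:
        self.fetch_rest_top = fetch_rest_top
        self.max_strikes = max_strikes
        self.strikes: dict[str, int] = {}

    def check(self, token_id: str, local_top: TopOfBook) -> bool:
        """Return True if the token's book should be treated as drifted."""
        if self.fetch_rest_top(token_id) == local_top:
            self.strikes[token_id] = 0            # agreement resets the counter
            return False
        self.strikes[token_id] = self.strikes.get(token_id, 0) + 1
        return self.strikes[token_id] >= self.max_strikes
```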

Layer 3: Preventive Maintenance

Even if no errors are detected, a production system should periodically resync anyway.

By forcing a scheduled refresh every few minutes (with randomized jitter so that books don't all resync at the same moment and spike CPU and REST load), you cap the maximum lifespan of any undetectable bug or "Ghost Bid."
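Here is a sketch of jittered preventive resync scheduling. The 300-second base, standing in for "every few minutes," and the ±20% jitter are assumed values, and the function names are hypothetical. Passing a seeded `random.Random` keeps the schedule reproducible in tests.

```python
import random
from typing import Optional


def next_resync_delay(base_s: float = 300.0, jitter_frac: float = 0.2,
                      rng: Optional[random.Random] = None) -> float:
    """Seconds until a book's next preventive resync: base_s plus or minus up to jitter_frac * base_s."""
    if rng is None:
        rng = random.Random()
    spread = base_s * jitter_frac
    return base_s + rng.uniform(-spread, spread)


def schedule_resyncs(token_ids: list[str], now: float, rng: random.Random) -> dict[str, float]:
    """Give every token its own independently jittered deadline (absolute time in seconds)."""
    return {t: now + next_resync_delay(rng=rng) for t in token_ids}
```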

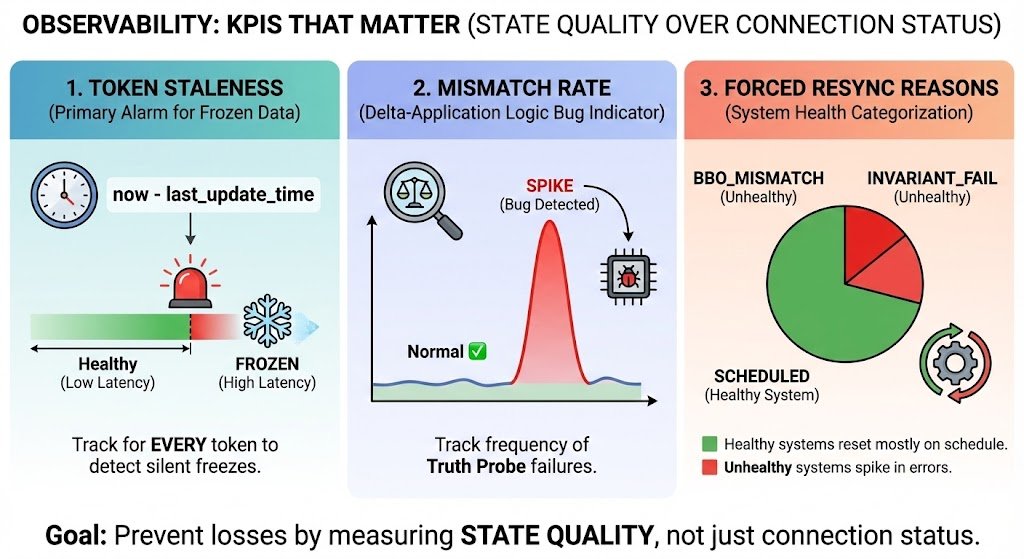

5. Observability: KPIs That Matter

If you want observability that actually prevents losses, you need metrics that measure state quality, not just connection status.

The three KPIs that prevent losses: Token Staleness, Mismatch Rate, and Forced Resync Reasons

Token Staleness

Track `now - last_update_time` for every single token. This is your primary alarm for frozen data.

Mismatch Rate

Track how often your Truth Probes fail. A spike here usually points to a bug in your delta-application logic, or to updates being dropped before they reach it.

Forced Resync Reasons

Categorize why your system is resetting:

- ✅ A healthy system resets mostly due to schedules

- ⚠️ An unhealthy system shows spikes in `INVARIANT_FAIL` or `BBO_MISMATCH` errors
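Here is a sketch of the bookkeeping behind the last two KPIs. Token staleness needs no extra structure, since it is a direct `now - last_update_time` subtraction per token. INVARIANT_FAIL and BBO_MISMATCH come from the text; SCHEDULED, STALE, and RECONNECT are hypothetical reason codes, and `MirrorKPIs` is an illustrative name.

```python
from collections import Counter
from enum import Enum


class ResyncReason(Enum):
    SCHEDULED = "SCHEDULED"
    INVARIANT_FAIL = "INVARIANT_FAIL"
    BBO_MISMATCH = "BBO_MISMATCH"
    STALE = "STALE"
    RECONNECT = "RECONNECT"


class MirrorKPIs:
    def __init__(self) -> None:
        self.resyncs: Counter = Counter()   # ResyncReason -> count
        self.probes = 0
        self.probe_mismatches = 0

    def record_probe(self, matched: bool) -> None:
        self.probes += 1
        if not matched:
            self.probe_mismatches += 1

    def record_resync(self, reason: ResyncReason) -> None:
        self.resyncs[reason] += 1

    def mismatch_rate(self) -> float:
        return self.probe_mismatches / self.probes if self.probes else 0.0

    def scheduled_share(self) -> float:
        # A healthy mirror resets mostly on schedule; a falling share means real faults.
        total = sum(self.resyncs.values())
        return self.resyncs[ResyncReason.SCHEDULED] / total if total else 1.0
```

Alerting on a falling `scheduled_share()` or a rising `mismatch_rate()` turns the healthy/unhealthy distinction above into a signal a dashboard can act on.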

Summary: Speed is State

The difference between a script and a trading system is state management.

By building a resilient Local Mirror that handles snapshots, deltas, and drift, you create the foundation for high-frequency decision making.

What's Next

Now that your bot has a millisecond-perfect view of the market, what does it look at?

Part 4 will cover L2 Depth and The Two-Price Model: Why "Mid Price" is a lie, and how to calculate the true weighted cost of executing your size.

Keep Building

Follow on X: @ZEITFinance

Follow on X: @autonomous_af

Join Telegram: t.me/zeitfi

Read the Docs: zeit-1.gitbook.io/zeit

Continue the Series

This article is Part 3 of the ZEIT Finance series on building Polymarket trading bots. ZEIT Finance turns prediction markets into perpetual assets.